Modeling Neural Populations with Mixture Models

Modeling computation in the brain through Mixture Model parameter inference.

Abstract

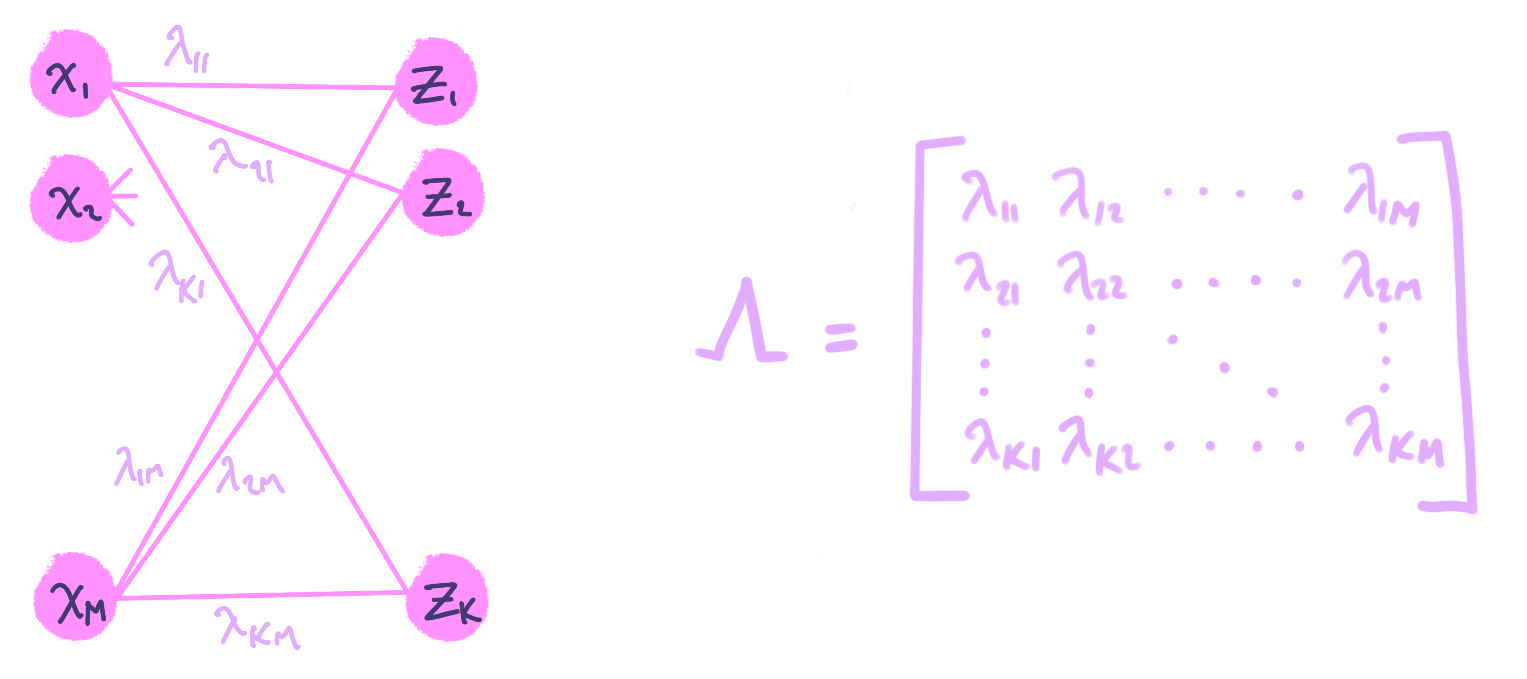

A Winner-Take-All network (WTAn) is a special kind of artificial neural network for classification. It has been studied in computational neuroscience because it provides a simple model of a connectivity pattern that is common in the cerebral cortex. Interestingly, WTAns have been shown to be analogous to the online K-means algorithm.
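To make the analogy concrete, here is a minimal sketch of online K-means written as a winner-take-all update. It is not taken from the project code: the function names, the learning rate `lr`, the number of epochs, and the use of squared Euclidean distance as the competition rule are all illustrative assumptions.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def online_kmeans(points, init_centroids, lr=0.05, epochs=5, seed=0):
    """Online K-means as a hard winner-take-all rule (illustrative sketch).

    Each centroid plays the role of one unit's weight vector. All names and
    default values here are assumptions made for this example.
    """
    rng = random.Random(seed)
    centroids = [list(c) for c in init_centroids]
    order = list(range(len(points)))
    for _ in range(epochs):
        rng.shuffle(order)  # present the samples one at a time, in random order
        for i in order:
            x = points[i]
            # Competition: the unit whose weights are closest to the input wins.
            winner = min(range(len(centroids)),
                         key=lambda j: sq_dist(centroids[j], x))
            # Learning: only the winner takes a small step toward the input.
            centroids[winner] = [w + lr * (xi - w)
                                 for w, xi in zip(centroids[winner], x)]
    return centroids
```

Because only the winning unit is updated, a unit that never wins the competition never moves. This is one way to see how the outcome can depend on initialization.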

One known issue with online K-means/WTAns is uneven membership assignment: depending on initialization, one centroid can end up responsible for multiple clusters of data points while other centroids are left with none.

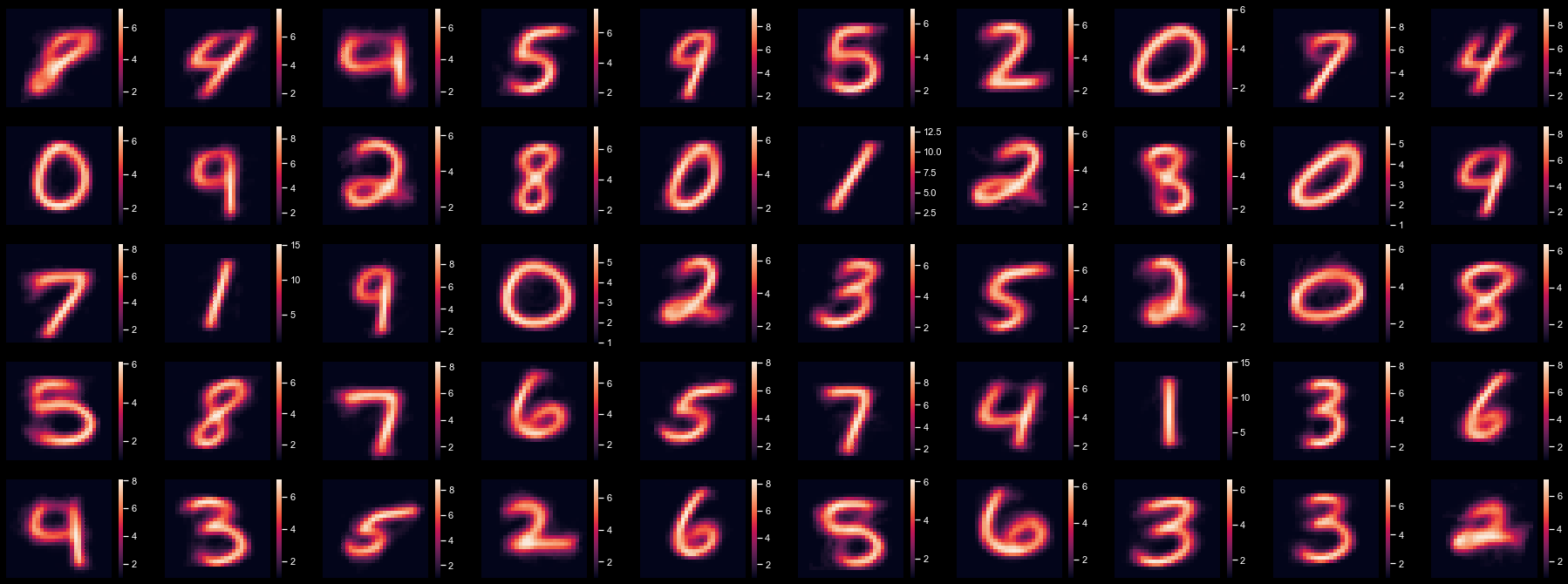

By generalizing online K-means to a Gaussian Mixture parameter inference problem, we find a natural solution to this issue without giving up the WTAn formulation. Furthermore, using a Poisson Mixture Model, we formulate an algorithm that we believe is better suited to representing neural populations and their dynamics.
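One way to picture the Gaussian Mixture view is to replace the hard winner with posterior responsibilities. The sketch below is only an illustration and not the algorithm from the report: it assumes equal mixing weights and a shared isotropic variance `sigma ** 2`, and the names `responsibilities` and `soft_update` are hypothetical.

```python
import math


def responsibilities(x, means, sigma=1.0):
    """Posterior probability of each component given x (illustrative sketch).

    Assumes equal mixing weights and a shared isotropic variance sigma**2.
    """
    logits = [-sum((xi - mi) ** 2 for xi, mi in zip(x, m)) / (2 * sigma ** 2)
              for m in means]
    top = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def soft_update(x, means, sigma=1.0, lr=0.05):
    """Move every mean toward x in proportion to its responsibility."""
    r = responsibilities(x, means, sigma)
    return [[mi + lr * rk * (xi - mi) for mi, xi in zip(m, x)]
            for m, rk in zip(means, r)]
```

Under these assumptions, every unit receives a share of each update, so a unit that starts far from the data can still drift toward it. As `sigma` shrinks, the responsibilities approach a one-hot vector and the rule approaches the hard winner-take-all update.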

Finally, we run simulations to demonstrate the potential of the proposed algorithm. This work is very similar to “Keck, 2012” and “Moraitis T., 2022”, especially in terms of the underlying theory, but the proposed algorithm differs from those in these papers. We argue that the framework used here and in the aforementioned papers provides a probabilistic interpretation of the underlying learning process.

Want to know more?

- 🚀 Check out the GitHub repo. You can find a copy of the written report of the project there.

References

2022

- SoftHebb: Bayesian inference in unsupervised Hebbian soft winner-take-all networks. Neuromorphic Computing and Engineering, Dec 2022.

2012

- Feedforward Inhibition and Synaptic Scaling – Two Sides of the Same Coin? PLOS Computational Biology, Mar 2012. Publisher: Public Library of Science.